Automatic Text Recognition

What is Automatic Text Recognition?

Automatic Text Recogniton (ATR) transforms your paper document piles into a searchable, editable and analysable digital format.

What is Optical Character Recognition (OCR)?

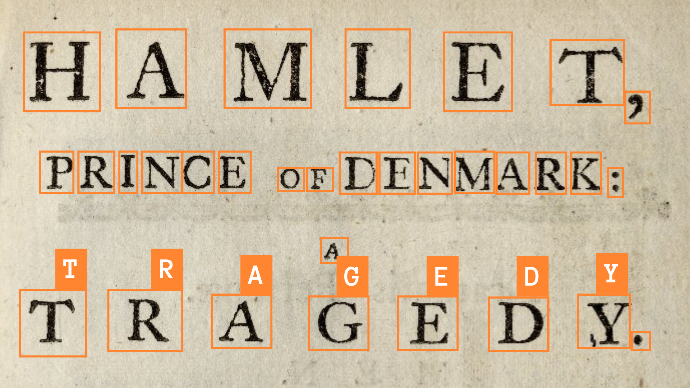

OCR (Optical Character Recognition) is the traditional method for recognizing printed text: it analyses individual character shapes and matches them to trained fonts. It works well when characters are clearly separated, fonts are standard, and image quality is good. Otherwise the accuracy degrades quickly.

What is Handwritten Text Recognition (HTR)?

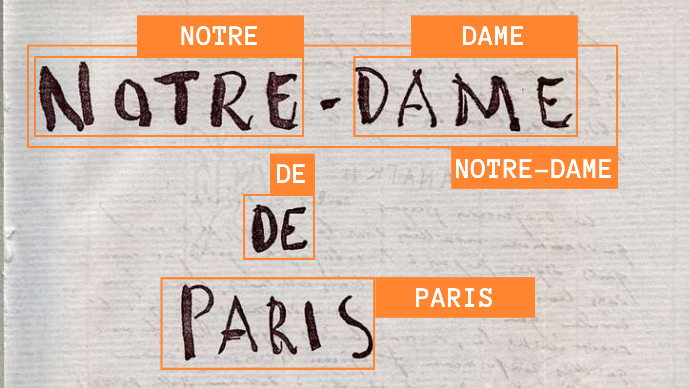

HTR (Handwritten Text Recognition) is designed for recognizing handwritten text (or mixed pen-written styles). Unlike OCR, HTR uses models that consider entire words or lines of text, combining optical information and linguistic context to produce more coherent transcriptions.

What about Automatic Text Recogniton (ATR)?

ATR is the convergence of OCR and HTR technologies. Over the past decade, with the development of deep learning algorithms,

the boundaries between OCR for printed

documents and HTR for handwritten documents are blurring. The models recognise both printed

and handwritten text and process lines or paragraphs.

Our ATR processing capacities

Multi-line transcription

Mixed handwritten and printed text

All script types (Latin, Arabic, Cyrillic...)

Historical and modern handwriting

Ancient languages

Enrichment with metadata

La méthodologie ATR unique de TEKLIA

Our advanced Automatic Text Recognition (ATR) services are powered by the latest advances in deep learning technologies. We train fully customised models designed to accurately understand and transcribe rare language scripts and complex handwriting. TEKLIA has developed two different approaches for historical document processing:

Sequential ATR method

Integrated ATR method

In more complex scenarios where the page layout does not conform to a standard structure, a canonical reading order for the entire page may not be feasible. In such cases, TEKLIA offers an integrated end-to-end approach using a single deep learning model. The model's learning is based on the patterns and structures present in the training data, enabling it to accurately determine the reading order of text zones even in complex and diverse layouts.

Q&A Document specificities

Text line detection in any orientation:

Our text line recognition models (Doc-UFCN , YOLO V8), are adept at

detecting text lines in any orientation (0 to 360°). These models are

designed to accurately detect text lines regardless of their rotational

position.

Determine the reading direction:

Once the text

lines have been detected, the reading direction is determined by one of

two methods. The first method involves training a classifier

specifically for this purpose, to predict if the reading order is

right-to-left or left-to-right. The second method is to perform text

recognition in both possible directions and then select the result with

the highest confidence. Note that both Doc-UFCN and YOLO can detect and

classify horizontal and vertical lines.

Additionally, integrated models such as the Document Attention Network (DAN) have the capability to predict the language concurrently with the text transcription, enhancing the efficiency and accuracy of processing multilingual documents. We are currently experimenting with DAN to recognise documents containing languages written in different directions (French and Arabic).

Q & A Quality Control

Arkindex for Exploration: Arkindex is our first tool designed to visualise the results of automatic transcription and compare them with the corresponding images. It allows users to view transcriptions at different levels - word, line, paragraph or page. In this interface, the relevant element in the image is highlighted and the transcription is displayed in a detailed panel. This panel includes the confidence score, the source of the transcription (algorithm, model) and a link to the execution process that produced this transcription. Arkindex is particularly well suited to exploring the results of transcription, providing an intuitive and informative interface for users to delve into the details of the transcription process and its results.

Callico for evaluation and validation: Callico is designed for evaluation or validation campaigns where a team of annotators evaluates or corrects the results of automatic transcription. This tool provides a comprehensive workflow management system for handling validation campaigns involving a large number of documents and annotators. As well as facilitating the validation process, Callico also provides an evaluation of the Character Error Rate (CER). It also allows all transcriptions to be exported in CSV or XLSX format for further statistical analysis. This makes Callico an invaluable tool for teams undertaking systematic and large-scale evaluation or correction of automated transcriptions.